This builds on my previous post about setting up drizzle with postgres, expanding it to include an API server written in go and minio, an S3-compatible, locally deployable storage server, for document storage.

An example/template can be found in this repo

Motivation

I'm still working on this environment and setup for one of my side projects, chamber, but it took me quite a while to get everything working and all the configuration correct. For this project, I wanted a server that could be deployed with minimal memory impact, so it could be hosted on a platform like railway without incurring huge costs. That is why I landed on go.

Go

I wrote this very blog service in go and enjoyed the low-level feel of writing out the functions. However, that project didn't use docker at all, which was a requirement for this side project, since multiple developers would be working on it at once and, ideally, it could be deployed multiple times from a single image.

Drizzle

I probably should've used an ORM in the same language as the API server, so that I could get proper type support. I could have chosen to write the server in typescript using bun; sure, the memory impact would've been larger, but the developer experience might have been better. But I've found over the past year that every time I use drizzle in a complex enough application, I end up writing raw sql anyway. My reason for using drizzle was to have the schema declared in code that is easy to change, and to have it generate migrations automatically. Hopefully this means I can change the schema in the future without having to manually modify or migrate the production database.

Minio

It's open source, can be run locally, and is S3 compatible. I'll be honest, I didn't spend a whole lot of time looking into alternatives; this checks all three boxes I needed for this project. One current downside of the stack is having to download the mc client for testing, which makes the test image huge and takes forever to install. It's required in order to upload test files to the buckets before any of the tests run, but I'm considering writing a simple go or bun script to do this instead, to avoid installing another program into the test container.

Testing

The docker compose setup runs both the unit tests, written in the go project, and the end-to-end tests, written with bun. I went with bun tests because they're fast and easy to write, and since my front-end will be the one consuming the api, it makes sense to have the end-to-end tests written in javascript.

Important Details

This project uses two different .env files when developing locally. The tests run using .env.test, and the app itself sources from .env. Importantly, after changing the minio or database credentials in either .env file, you should remove and rebuild the database and minio containers (for postgres, that includes the data volume, since it keeps the credentials it was first initialized with). I ran into this problem and spent forever debugging it: the tests kept reporting the wrong database password, even though they were reading the exact same .env.test file.

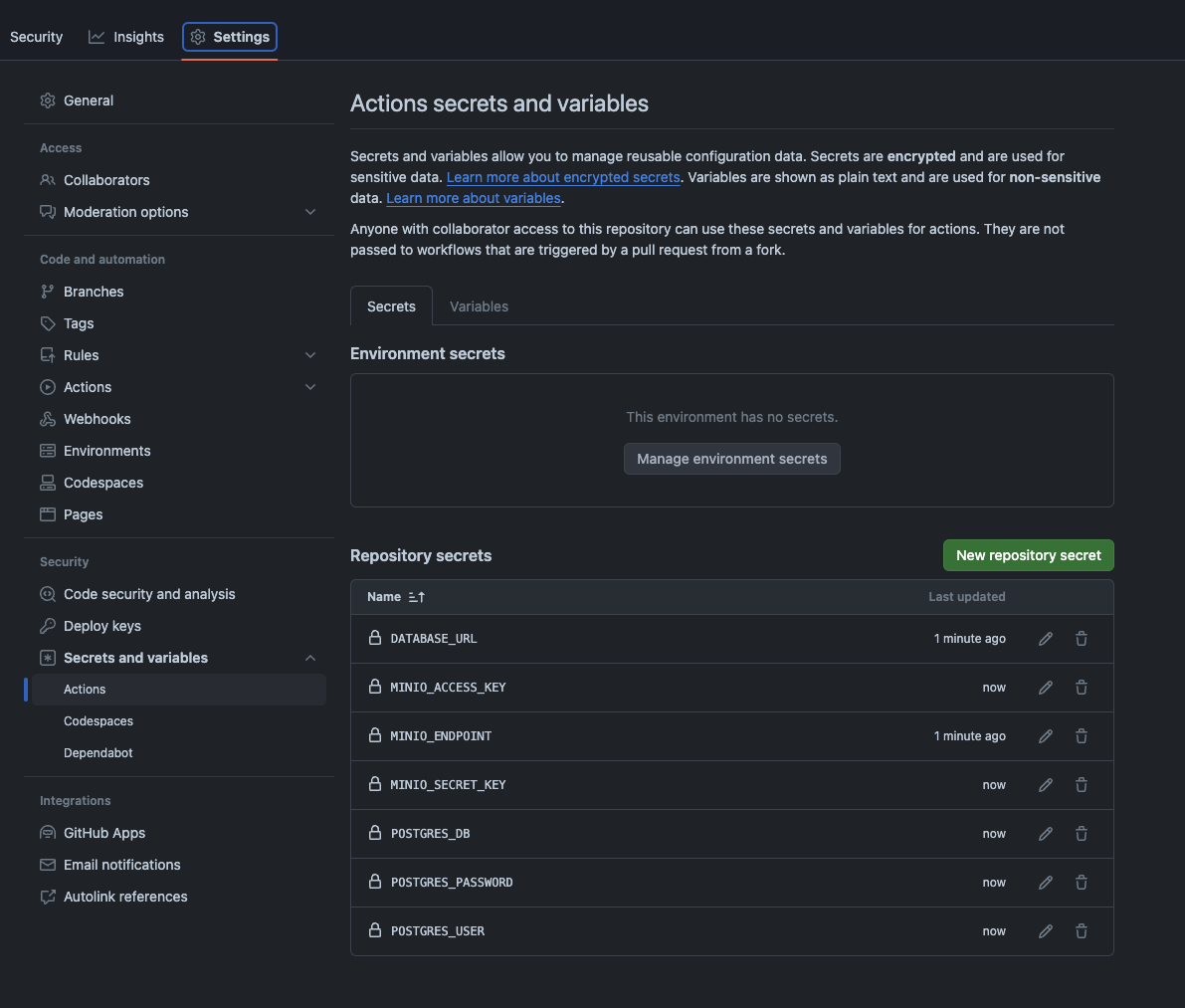

For the github action to work properly, you will need to define repository secrets. I recommend creating dedicated usernames and passwords for these, to be safe rather than sorry.

Development

Database

The /database sub-directory contains a bun project with drizzle and postgres installed. You define the schema in the schema.ts file, following the normal approach described in the drizzle docs. The example schema.ts:

    import { sql } from 'drizzle-orm';
    import { pgTable, text, timestamp, uuid } from 'drizzle-orm/pg-core';

    // Example table; both timestamps default to the time the row is inserted.
    export const user = pgTable('user', {
      id: uuid('id').primaryKey(),
      email: text('email'),
      created_at: timestamp('created_at').default(sql`now()`),
      updated_at: timestamp('updated_at').default(sql`now()`)
    });

The readme.md contains a few commands and an important note to read when testing locally, which I'll include below:

### commands

- `bun reset` resets the migrations tracking

- `bun generate` generates sql documents for migrations

- `bun migrate` applies the migrations to the database

- `bun migrate:test` applies migrations to the test database

### todo

currently you have to change the DATABASE_URL to point to `127.0.0.1` instead of the database service name, since this script isn't run within the docker context. either need some way of hotswapping this into the database url or running the migration within the docker scripts.
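One possible way to do the hotswapping mentioned in that todo is to rewrite the host of the URL just before running the migration. The sketch below is only an illustration: the `withHost` helper and the placeholder URL are hypothetical and not part of the example repo.

```go
package main

import (
	"fmt"
	"net"
	"net/url"
	"os"
)

// withHost (hypothetical helper) swaps the host of a database URL and
// keeps the port, credentials, path and query string untouched.
func withHost(rawURL, host string) (string, error) {
	u, err := url.Parse(rawURL)
	if err != nil {
		return "", err
	}
	if port := u.Port(); port != "" {
		u.Host = net.JoinHostPort(host, port)
	} else {
		u.Host = host
	}
	return u.String(), nil
}

func main() {
	raw := os.Getenv("DATABASE_URL")
	if raw == "" {
		// Placeholder value so the sketch runs without any environment set.
		raw = "postgres://user:password@db:5432/app"
	}
	local, err := withHost(raw, "127.0.0.1")
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	// Print the rewritten URL so a shell script can capture it.
	fmt.Println(local)
}
```

A wrapper script could capture the printed value and pass it to `bun migrate` as DATABASE_URL, which would leave the .env files pointing at the service name for everything that runs inside docker.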

Testing

End-to-end tests are described in the /testing sub-directory. Here is an example of the tests included in the example repo:

    import { describe, it, expect } from "bun:test";

    describe("Go server endpoints", () => {
      it("should return 'world' for GET /hello", async () => {
        const response = await fetch("http://127.0.0.1:8080/hello");
        const result = await response.text();
        expect(result).toBe("world");
      });

      it("should return contents of test.txt for GET /test/fetch", async () => {
        const response = await fetch("http://127.0.0.1:8080/test/fetch");
        const result = await response.text();
        expect(result).toBe("This is a test file.\n");
      });

      it("should return 200 status for GET /test/database", async () => {
        const response = await fetch("http://127.0.0.1:8080/test/database");
        expect(response.status).toBe(200);
        const result = await response.text();
        expect(result).toBe("Database is running");
      });
    });

Application

The main go application in the example is under /app and just contains some basic endpoints used by the end-to-end tests. It currently still uses gorilla/mux, as this is what was recommended when I wrote my blog service. However, I believe the standard library's net/http has supported this kind of method-based routing since go 1.22, so I may look into updating this stack at some point (read more).

Here is the main.go file:

    package main

    import (
    	"database/sql"
    	"fmt"
    	"log"
    	"net/http"
    	"os"

    	"github.com/gorilla/mux"
    	_ "github.com/lib/pq" // registers the "postgres" driver
    	"github.com/minio/minio-go/v7"
    	"github.com/minio/minio-go/v7/pkg/credentials"
    )

    var minioClient *minio.Client

    func main() {
    	// Initialize MinIO client
    	var err error
    	minioClient, err = minio.New(os.Getenv("MINIO_ENDPOINT"), &minio.Options{
    		Creds:  credentials.NewStaticV4(os.Getenv("MINIO_ACCESS_KEY"), os.Getenv("MINIO_SECRET_KEY"), ""),
    		Secure: false,
    	})
    	if err != nil {
    		log.Fatalln(err)
    	}

    	// Initialize router
    	r := mux.NewRouter()
    	r.HandleFunc("/hello", HelloHandler).Methods("GET")
    	r.HandleFunc("/test/fetch", FetchFileHandler).Methods("GET")
    	r.HandleFunc("/test/database", TestDatabaseHandler).Methods("GET")

    	// Start server
    	http.Handle("/", r)
    	log.Println("Server started on :8080")
    	log.Fatal(http.ListenAndServe(":8080", nil))
    }

    // HelloHandler responds with a fixed body, used as a smoke test.
    func HelloHandler(w http.ResponseWriter, r *http.Request) {
    	fmt.Fprint(w, "world")
    }

    // FetchFileHandler streams test.txt from the testbucket bucket.
    func FetchFileHandler(w http.ResponseWriter, r *http.Request) {
    	object, err := minioClient.GetObject(r.Context(), "testbucket", "test.txt", minio.GetObjectOptions{})
    	if err != nil {
    		http.Error(w, err.Error(), http.StatusInternalServerError)
    		return
    	}
    	defer object.Close()

    	stat, err := object.Stat()
    	if err != nil {
    		http.Error(w, err.Error(), http.StatusInternalServerError)
    		return
    	}

    	http.ServeContent(w, r, stat.Key, stat.LastModified, object)
    }

    // TestDatabaseHandler opens a connection and pings the database.
    func TestDatabaseHandler(w http.ResponseWriter, r *http.Request) {
    	db, err := sql.Open("postgres", os.Getenv("DATABASE_URL"))
    	if err != nil {
    		// Log and respond; log.Fatalf here would exit the whole server
    		// before the error response could be written.
    		log.Printf("Error opening database: %q", err)
    		http.Error(w, "Database connection failed", http.StatusInternalServerError)
    		return
    	}
    	defer db.Close()

    	if err = db.Ping(); err != nil {
    		log.Printf("Error pinging database: %q", err)
    		http.Error(w, "Database is not running", http.StatusInternalServerError)
    		return
    	}

    	w.WriteHeader(http.StatusOK)
    	fmt.Fprint(w, "Database is running")
    }

Deployment

The real magic of this stack, and what caused the most headaches, was the docker-compose setup.

I've included a Makefile that simplifies a lot of the docker-compose scripts into simple commands:

    clean-build: stop
    	docker-compose --env-file .env build test-db db minio
    	docker-compose --env-file .env build app --no-cache
    	docker-compose --env-file .env build test --no-cache

    build: stop
    	docker-compose --env-file .env build test-db db minio
    	docker-compose --env-file .env build app

    infrastructure:
    	docker-compose --env-file .env up db minio -d

    stop:
    	docker-compose down
    	docker-compose down --remove-orphans

    run: stop build infrastructure
    	docker-compose --env-file .env up app

    test: stop
    	docker-compose --env-file .env.test build test-db minio
    	docker-compose --env-file .env.test up test-db minio -d
    	docker-compose --env-file .env.test build test
    	docker-compose --env-file .env.test run test

And here is the docker-compose script:

    services:
      app:
        build:
          context: .
          dockerfile: Dockerfile
        image: chamber-app
        profiles: ["server"]
        depends_on:
          - db
          - minio
        ports:
          - "8080:8080"
        environment:
          DATABASE_URL: ${DATABASE_URL}
          MINIO_ENDPOINT: ${MINIO_ENDPOINT}
          MINIO_ACCESS_KEY: ${MINIO_ACCESS_KEY}
          MINIO_SECRET_KEY: ${MINIO_SECRET_KEY}
          POSTGRES_DB: ${POSTGRES_DB}
          POSTGRES_USER: ${POSTGRES_USER}
          POSTGRES_PASSWORD: ${POSTGRES_PASSWORD}

      db:
        image: postgres
        environment:
          POSTGRES_DB: ${POSTGRES_DB}
          POSTGRES_USER: ${POSTGRES_USER}
          POSTGRES_PASSWORD: ${POSTGRES_PASSWORD}
        volumes:
          - postgres_data:/var/lib/postgresql/data
        ports:
          - "5432:5432"

      test-db:
        image: postgres
        environment:
          POSTGRES_DB: ${POSTGRES_DB}
          POSTGRES_USER: ${POSTGRES_USER}
          POSTGRES_PASSWORD: ${POSTGRES_PASSWORD}
        volumes:
          - test_data:/var/lib/postgresql/data
        ports:
          - "5432:5432"

      minio:
        image: minio/minio
        command: server /data
        environment:
          MINIO_ROOT_USER: ${MINIO_ACCESS_KEY}
          MINIO_ROOT_PASSWORD: ${MINIO_SECRET_KEY}
        volumes:
          - minio_data:/data
        ports:
          - "9000:9000"
          - "9001:9001"

      test:
        build:
          context: .
          dockerfile: Dockerfile.test
        profiles: ["test"]
        depends_on:
          - test-db
          - minio
        environment:
          DATABASE_URL: ${DATABASE_URL}
          MINIO_ENDPOINT: ${MINIO_ENDPOINT}
          MINIO_ACCESS_KEY: ${MINIO_ACCESS_KEY}
          MINIO_SECRET_KEY: ${MINIO_SECRET_KEY}
          POSTGRES_DB: ${POSTGRES_DB}
          POSTGRES_USER: ${POSTGRES_USER}
          POSTGRES_PASSWORD: ${POSTGRES_PASSWORD}

    volumes:
      postgres_data:
      test_data:
      minio_data:

The reason for having separate db and test-db services is that a postgres volume keeps the username and password it was initialized with. With a single database service, every switch between the testing and development environments would mean deleting and rebuilding everything.